I’ve tried a few different Optical Character Recognition (OCR) services for Canto Read. First I tried Apple’s Vision Framework. It all happens on the device, which is nice, but the results I got weren’t very good: it failed to detect a lot of text, and the text it did recognise was often incorrect. So I thought, “Ahh, that’s a bummer” and moved on to Google’s similarly named OCR service, the Google Cloud Vision API.

Google’s API produced very accurate results, so I used it. A few weeks went by, and one day I was looking at an image on my iPhone. The image had some text in it; I pressed on the text for some reason and noticed that it got highlighted. “Ah cool, they’re using their Vision Framework in the Gallery app.” I tried it on some Chinese text, and the results were incredibly good. At that point I figured Apple had improved the quality of their OCR, and I made a mental note to try the Vision Framework again in the future.

Trying it out again

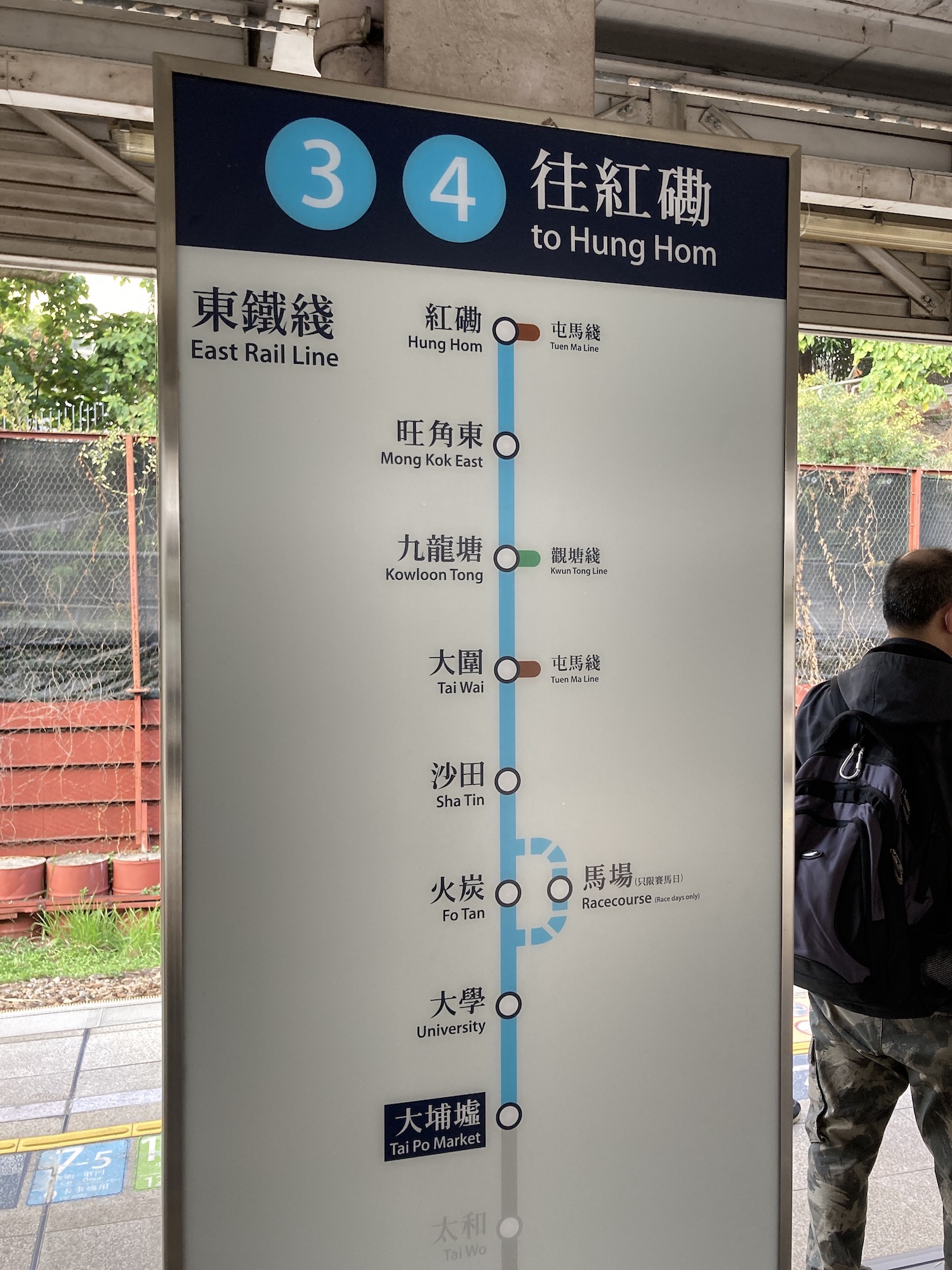

Here’s the experiment we’re going to run:

- Use the Vision Framework to get the text out of the image.

- Do a lot of pinching and zooming on the image in the Gallery app and manually extract the text.

OCR with Vision Framework

The code below triggers an OCR request and prints out the results:

    import UIKit
    import Vision

    // Runs text recognition on the image and passes the results to recognizeTextHandler.
    func ocrImage(image: UIImage) {
        guard let cgImage = image.cgImage else {
            print("Failed to get cgImage from image. Exiting.")
            return
        }

        let requestHandler = VNImageRequestHandler(cgImage: cgImage)
        let request = VNRecognizeTextRequest(completionHandler: recognizeTextHandler)

        // Traditional Chinese first, then Simplified Chinese.
        request.recognitionLanguages = ["zh-Hant", "zh-Hans"]
        request.recognitionLevel = .accurate
        request.usesLanguageCorrection = true

        do {
            try requestHandler.perform([request])
        } catch {
            print("Unable to perform the requests: \(error).")
        }
    }

    // Prints the best candidate string for each piece of text that was detected.
    func recognizeTextHandler(request: VNRequest, error: Error?) {
        guard let observations = request.results as? [VNRecognizedTextObservation] else { return }

        for obs in observations {
            // topCandidates(1) can be empty, so avoid force-unwrapping.
            if let best = obs.topCandidates(1).first {
                print(best.string)
            }
        }
    }
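When the output looks off, it can help to see which revision and languages the request is actually using, and how confident Vision is about each line. The sketch below is one way to do that, not part of the original experiment. It assumes iOS 15 / macOS 12 or later for `supportedRecognitionLanguages()`, and the names `printSupportedLanguages`, `confidenceLoggingHandler` and `formatCandidate` are made up for illustration.

```swift
import Foundation

// Pure helper (hypothetical name): formats one recognised line with its confidence.
func formatCandidate(text: String, confidence: Float) -> String {
    return "\(String(format: "%.2f", confidence))  \(text)"
}

#if canImport(Vision)
import Vision

// Prints the request revision and the languages it reports as supported
// at the .accurate recognition level.
@available(iOS 15.0, macOS 12.0, *)
func printSupportedLanguages() {
    let request = VNRecognizeTextRequest()
    request.recognitionLevel = .accurate
    do {
        let languages = try request.supportedRecognitionLanguages()
        print("Revision \(request.revision) supports: \(languages)")
    } catch {
        print("Could not query supported languages: \(error)")
    }
}

// Drop-in alternative to recognizeTextHandler that also logs confidence
// and the normalised bounding box of each observation.
func confidenceLoggingHandler(request: VNRequest, error: Error?) {
    guard let observations = request.results as? [VNRecognizedTextObservation] else { return }
    for obs in observations {
        guard let best = obs.topCandidates(1).first else { continue }
        print(formatCandidate(text: best.string, confidence: best.confidence), obs.boundingBox)
    }
}
#endif
```

If the garbage lines come back with low confidence, filtering on a threshold would at least hide them, although it would not recover the station names that were missed.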

Results

往紅磡

屯馬綫

工

九龍塘

東鐵綫

鏐嶄體

參第平

罐鄑甾

™ 旺角東

JeM!el 零

田休

品

南¥

雖野¥

Gallery App OCR

Results

往紅磡

東鐵綫

紅磡

屯馬綫

旺角東。

九龍塘

觀塘綫

大圍

屯馬綫

沙田

火炭

大學

大埔墟
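To put a rough number on the difference, here is a small sketch that counts how many of the Gallery app’s lines the Vision Framework reproduced exactly. It assumes the Gallery output can be treated as the reference, which I haven’t verified character by character, and it only counts exact line matches, so “™ 旺角東” does not count as a match for “旺角東。”.

```swift
// Lines copied from the two result lists in this post.
let visionLines = [
    "往紅磡", "屯馬綫", "工", "九龍塘", "東鐵綫", "鏐嶄體", "參第平",
    "罐鄑甾", "™ 旺角東", "JeM!el 零", "田休", "品", "南¥", "雖野¥",
]
let galleryLines = [
    "往紅磡", "東鐵綫", "紅磡", "屯馬綫", "旺角東。", "九龍塘", "觀塘綫",
    "大圍", "屯馬綫", "沙田", "火炭", "大學", "大埔墟",
]

// Assumption: the Gallery output is the reference. Duplicates collapse in the set.
let reference = Set(galleryLines)

// Lines that the Vision Framework returned exactly as the Gallery app did.
let recovered = reference.intersection(visionLines)

print("Vision matched \(recovered.count) of \(reference.count) distinct Gallery lines")
// prints "Vision matched 4 of 12 distinct Gallery lines"
```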

Conclusion

The OCR results from the Gallery app are much better than the ones I got from the Vision Framework. If anyone knows why, I’d love to hear the answer. You can email me at angus@bankstatementconverter.com